This repository is the official implementation of the paper "Task-oriented Sequential Grounding in 3D Scenes".

- [ 2024.09 ] Released the data generation code.

- [ 2024.08 ] Released the model code.

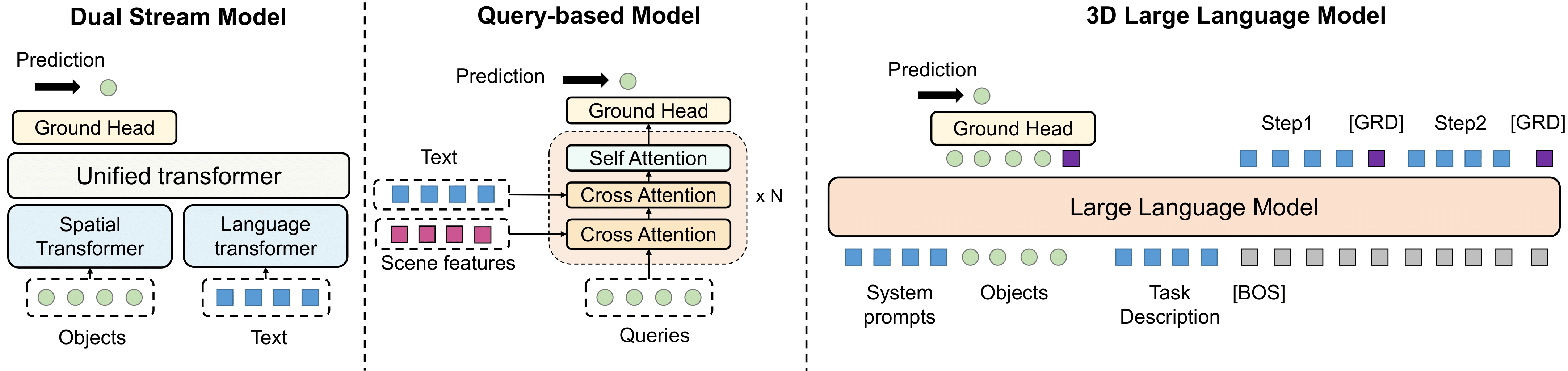

Grounding natural language in physical 3D environments is essential for the advancement of embodied artificial intelligence. Current datasets and models for 3D visual grounding predominantly focus on identifying and localizing objects from static, object-centric descriptions. These approaches do not adequately address the dynamic and sequential nature of task-oriented grounding necessary for practical applications. In this work, we propose a new task: Task-oriented Sequential Grounding in 3D scenes, wherein an agent must follow detailed step-by-step instructions to complete daily activities by locating a sequence of target objects in indoor scenes. To facilitate this task, we introduce SG3D, a large-scale dataset containing 22,346 tasks with 112,236 steps across 4,895 real-world 3D scenes. The dataset is constructed using a combination of RGB-D scans from various 3D scene datasets and an automated task generation pipeline, followed by human verification for quality assurance. We adapted three state-of-the-art 3D visual grounding models to the sequential grounding task and evaluated their performance on SG3D. Our results reveal that while these models perform well on traditional benchmarks, they face significant challenges with task-oriented sequential grounding, underscoring the need for further research in this area.

- Create the conda environment and install the packages

conda env create --name envname

pip3 install torch==2.0.0

pip3 install torchvision==0.15.1

pip3 install -r requirements.txt

- Install PointNet2

cd modules/third_party

# PointNet2

cd pointnet2

python setup.py install

cd ..
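After the installation steps above, a quick check that the pinned versions actually landed in the active environment can save a failed run later. The following is a minimal sketch, not part of this repository: the helper name `check_pins` is hypothetical, and it only compares the two versions pinned above (`torch==2.0.0`, `torchvision==0.15.1`) using the standard library.

```python
from importlib import metadata

# Versions pinned by the installation steps above.
PINNED = {"torch": "2.0.0", "torchvision": "0.15.1"}


def check_pins(pins):
    """Return {package: (expected, found)} for every pin that is not satisfied.

    `found` is None when the package is not installed at all.
    (Hypothetical helper, not part of the repository.)
    """
    problems = {}
    for name, expected in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems[name] = (expected, None)
            continue
        # Ignore local build tags such as "+cu117" when comparing.
        if found.split("+")[0] != expected:
            problems[name] = (expected, found)
    return problems


if __name__ == "__main__":
    for name, (expected, found) in check_pins(PINNED).items():
        print(f"{name}: expected {expected}, found {found or 'not installed'}")
```

An empty result means both pins are satisfied; anything printed points at a package to reinstall.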

- Download the SceneVerse data from scene_verse_base and change `data.scene_verse_base` to the SceneVerse data directory.
- Download the segment-level data from scene_ver_aux and change `data.scene_verse_aux` to the download directory.
- Download the other data from scene_verse_pred and change `data.scene_verse_pred` to the download directory.
- Download the sequential grounding checkpoint and data from sequential-grounding and change `data.sequential_grounding_base` to the download directory; change `pretrained_weights_dir` to the downloaded PointNet directory.
- Download Vicuna-7B from Vicuna and change `model.llm.cfg_path`.
- Change every remaining `TBD` in the config.
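Because the setup touches several config entries, it can help to verify them before launching a run. The sketch below is an illustration under assumptions, not repository code: it assumes the config has been loaded into a plain nested `dict`, that unset entries hold the literal string `TBD`, and the helper names `lookup` and `find_unset_paths` are hypothetical. `pretrained_weights_dir` is left out because its position in the config hierarchy is not stated here.

```python
import os

# Config keys named in the setup steps above.
PATH_KEYS = [
    "data.scene_verse_base",
    "data.scene_verse_aux",
    "data.scene_verse_pred",
    "data.sequential_grounding_base",
    "model.llm.cfg_path",
]


def lookup(cfg, dotted_key):
    """Walk a nested dict with a dotted key; return None if any part is missing."""
    node = cfg
    for part in dotted_key.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def find_unset_paths(cfg, keys=PATH_KEYS):
    """Return (key, reason) pairs for entries that still need attention."""
    issues = []
    for key in keys:
        value = lookup(cfg, key)
        if value is None:
            issues.append((key, "missing"))
        elif value == "TBD":
            issues.append((key, "still TBD"))
        elif not os.path.exists(str(value)):
            issues.append((key, "path does not exist"))
    return issues
```

Calling `find_unset_paths(cfg)` on the loaded config returns an empty list once every listed entry points at an existing path.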

Training (configs/vista)

python3 run.py --config-path configs/vista --config-name sequential-sceneverse.yaml

Testing (configs/vista)

python3 run.py --config-path configs/vista --config-name sequential-sceneverse.yaml pretrain_ckpt_path=PATH

Training (configs/query3d)

python3 run.py --config-path configs/query3d --config-name sequential-sceneverse.yaml

Testing (configs/query3d)

python3 run.py --config-path configs/query3d --config-name sequential-sceneverse.yaml pretrain_ckpt_path=PATH

Training (configs/sequential)

python3 run.py --config-path configs/sequential --config-name sequential-sceneverse.yaml

Testing (configs/sequential)

python3 run.py --config-path configs/sequential --config-name sequential-sceneverse.yaml pretrain_ckpt_path=PATH
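The training and testing commands above differ only in the config directory and in whether `pretrain_ckpt_path` is appended. A small sketch of that pattern follows; `build_run_command` is a hypothetical helper written for illustration, and it only assembles the same arguments shown above without running anything.

```python
import shlex

# Config directories used by the commands above.
CONFIG_DIRS = ("configs/vista", "configs/query3d", "configs/sequential")


def build_run_command(config_dir, ckpt_path=None,
                      config_name="sequential-sceneverse.yaml"):
    """Build the argv list for run.py; pass ckpt_path to get the testing form.

    (Hypothetical helper, not part of the repository.)
    """
    argv = ["python3", "run.py",
            "--config-path", config_dir,
            "--config-name", config_name]
    if ckpt_path is not None:
        # Testing reuses the training config and adds the checkpoint override.
        argv.append(f"pretrain_ckpt_path={ckpt_path}")
    return argv


if __name__ == "__main__":
    for config_dir in CONFIG_DIRS:
        print(shlex.join(build_run_command(config_dir)))
        print(shlex.join(build_run_command(config_dir, ckpt_path="PATH")))
```

The returned list can be passed directly to `subprocess.run` if the commands should be executed from a script rather than typed by hand.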

For multi-GPU training, use launch.py:

python launch.py --mode ${launch_mode} \
    --qos=${qos} --partition=${partition} --gpu_per_node=4 --port=29512 --mem_per_gpu=80 \
    --config ${config}

We have released our task data generation scripts in the data_generation/ directory. For detailed instructions, refer to the data generation guide.

We would like to thank the authors of LEO, Vil3dref, Mask3d, Openscene, Xdecoder, and 3D-VisTA for their open-source release.