-

Notifications

You must be signed in to change notification settings - Fork 54

Example: Dense Depth Reconstruction with Block Matching Algorithm

#Dense Depth Reconstruction with Block Matching Algorithm

To see the whole code of this example: link

###Introduction This tutorial shows how to implement dense depth reconstruction from two images using the block matching algorithm.

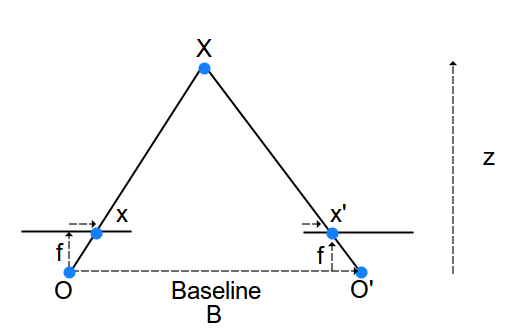

Using two images we are able to infer the depth by knowing where two corresponding pixels are. It is an important task in many applications in computer vision like object recognition, localization and mapping. The diagram below shows how to compute the depth using two images.

We have object X and its projection in both cameras (x and x'). By knowing the correspondent points in both images, we can calculate the disparity, which will give us the difference between the location of the object from the left image to the right image. see the equation used for this calculation below. When objects are close to the camera this difference is big, when objects are far this difference is small.

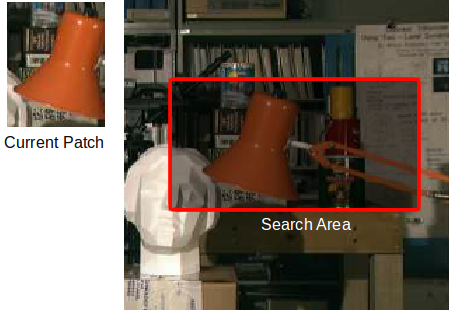

The theory is really intuitive, however to find the correspondent pixels is the main challenge for this problem. To find the correspondent points we are going to use the block matching algorithm. The block matching algorithm will get a region in the first image and try to find the most similar region in the second image using metrics.

In this tutorial we are going to implement a version which will only search in the left direction (assuming the cameras are in the same height and the second camera is at the right position) and sum of absolute difference as metric. There are two parameters to implement the block matching algorithm, the size of the block and the maximum disparity. The maximum disparity means the maximum number of pixels which will be searched. The bigger the block size the more accurate our result is, however it is computationally more expensive.

###Implementation

This algorithm can be implemented in parallel using VisionCpp since the search is executed independently for each pixel.

Like all VisionCpp examples, first we need to initialize our terminal nodes. In the case of this example, we have the left and right images as input and the depth map as output.

// store the left image in the terminal node

auto in_l =

visioncpp::terminal<visioncpp::pixel::U8C1, COLS, ROWS,

visioncpp::memory_type::Buffer2D>(input_l.data);

// store the right image in the terminal node

auto in_r =

visioncpp::terminal<visioncpp::pixel::U8C1, COLS, ROWS,

visioncpp::memory_type::Buffer2D>(input_r.data);

// the node which gets the output data

auto out =

visioncpp::terminal<visioncpp::pixel::U8C1, COLS, ROWS,

visioncpp::memory_type::Buffer2D>(output.get());Now we need to create the nodes that will convert the image to float and merge the images in a node, so we are able to access the same pixel from both images for the implementation of the block matching kernel.

// convert images to float

auto fgrey_l = visioncpp::point_operation<visioncpp::OP_U8C1ToFloat>(in_l);

auto fgrey_r = visioncpp::point_operation<visioncpp::OP_U8C1ToFloat>(in_r);

// merge images

auto merge =

visioncpp::point_operation<visioncpp::OP_Merge2Chns>(fgrey_l, fgrey_r);The next step is to create the node of our node which will compute the depth map. The neighbour_operation has 4 parameters related to the size of the halo (Top, Left, Right, Bottom), since we just search in the left, we need to add this halo of the search.

// compute depth map

auto depth = visioncpp::neighbour_operation<

Stereo_BMA, halfBlock, halfBlock + maxDisp, halfBlock, halfBlock>(

merge);To implement the block matching algorithm, two auxiliary functions were created for a clear implementation. We implemented the sum of the absolute differences (SAD) to get the best match and the getBlock function that gets the block from current pixel position.

struct Stereo_BMA {

// function that computes the sum of absolute difference of two blocks

float SAD(const float im1[blockSize * blockSize],

const float im2[blockSize * blockSize]) {

float r = 0;

for (size_t i = 0; i < blockSize * blockSize; i++) {

r += cl::sycl::fabs(im1[i] - im2[i]);

}

return r;

}

// Function to get block of size blockSize from (c,r) pixel

template <typename T>

void getBlock(const T& I, const int& c, const int& r, const int& layer,

float block[blockSize * blockSize]) {

int cnt = 0;

for (int i2 = -halfBlock; i2 <= halfBlock; i2++) {

for (int j2 = -halfBlock; j2 <= halfBlock; j2++) {

block[cnt++] = I.at(c + i2, r + j2)[layer];

}

}

}

};The functor of the block matching algorithm is shown below. The block matching algorithm gets the current block from the first image and computes the SAD from the blocks from the second image getting the location of the second image with lowest SAD. It return the disparity values which is the difference between the current pixel and the best SAD location.

// Stereo block matching algorithm for depth map reconstruction

struct Stereo_BMA {

// function that computes the depth map

template <typename T>

visioncpp::pixel::U8C1 operator()(const T& I) {

// get block from left image

float block_l[blockSize * blockSize];

getBlock(I, I.I_c, I.I_r, 0, block_l);

// start with best sum of absolute difference equals to infinity

float bestSAD = std::numeric_limits<float>::infinity();

int bestJ = I.I_c;

// for loop to find best match

float block_r[blockSize * blockSize];

for (int m = 0; m < maxDisp; m++) {

// get block from right image

getBlock(I, I.I_c - m, I.I_r, 1, block_r);

// sum of absolute difference

float temp = SAD(block_l, block_r);

// store the smallest SAD

if (temp < bestSAD) {

bestSAD = temp;

bestJ = I.I_c - m;

}

}

// Compute disparity value

return visioncpp::pixel::U8C1(I.I_c - bestJ);

}

};After computing the depth map, we scale the depth values for a better visualization of the result. To get the real measurement of the depth map we need to calibrate the camera first, but in this example we are just showing the implementation of the block matching algorithm.

// scale to display

auto scale_node = visioncpp::terminal<float, visioncpp::memory_type::Const>(

static_cast<float>(8.0f));

auto display =

visioncpp::point_operation<visioncpp::OP_Scale>(depth, scale_node);The last step of the VisionCpp examples is always to assign the result to a terminal node and execute the expression tree.

// assign to the output

auto exec = visioncpp::assign(out, display);

// execute expression tree

visioncpp::execute<visioncpp::policy::Fuse, SM, SM, SM, SM>(exec, dev);Now we can display our results.

// Display results

cv::imshow("Image left", input_l);

cv::imshow("Image right", input_r);

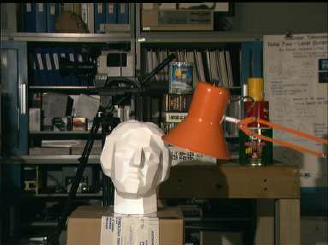

cv::imshow("Depth Map", outputImage);###Result In the image below we can see the left image, right image, and the depth map computed using the block matching algorithm. The brighter is the color of the result image, closer it is to the camera. The parameters used were 11 for block size and 25 for maximum disparity.

| Image Left | Image Right |

|---|---|

|

|

| Result |

|---|

|