diff --git a/docs/foundation/paligemma.md b/docs/foundation/paligemma.md

new file mode 100644

index 000000000..94b886405

--- /dev/null

+++ b/docs/foundation/paligemma.md

@@ -0,0 +1,140 @@

+PaliGemma is a large multimodal model developed by Google Research.

+

+You can use PaliGemma to:

+

+1. Ask questions about images (Visual Question Answering)

+2. Identify the location of objects in an image (object detection)

+3. Identify the precise location of objects in an imageh (image segmentation)

+

+You can deploy PaliGemma object detection models with Inference, and use PaliGemma for object detection.

+

+### How to Use PaliGemma (VQA)

+

+Create a new Python file called `app.py` and add the following code:

+

+```python

+import inference

+

+from inference.models.paligemma.paligemma import PaliGemma

+

+pg = PaliGemma(api_key="YOUR ROBOFLOW API KEY")

+

+from PIL import Image

+

+image = Image.open("image.jpeg") # Change to your image

+

+prompt = "How many dogs are in this image?"

+

+result = pg.predict(image,prompt)

+```

+

+In this code, we load PaliGemma run PaliGemma on an image, and annotate the image with the predictions from the model.

+

+Above, replace:

+

+1. `prompt` with the prompt for the model.

+2. `image.jpeg` with the path to the image in which you want to detect objects.

+

+To use PaliGemma with Inference, you will need a Roboflow API key. If you don't already have a Roboflow account, sign up for a free Roboflow account.

+

+Then, run the Python script you have created:

+

+```

+python app.py

+```

+

+The result from your model will be printed to the console.

+

+### How to Use PaliGemma (Object Detection)

+

+Create a new Python file called `app.py` and add the following code:

+

+```python

+import os

+import transformers

+import re

+import numpy as np

+import supervision as sv

+from typing import Tuple, List, Optional

+from PIL import Image

+

+image = Image.open("/content/data/dog.jpeg")

+

+def from_pali_gemma(response: str, resolution_wh: Tuple[int, int], class_list: Optional[List[str]] = None) -> sv.Detections:

+ _SEGMENT_DETECT_RE = re.compile(

+ r'(.*?)' +

+ r'' * 4 + r'\s*' +

+ '(?:%s)?' % (r'' * 16) +

+ r'\s*([^;<>]+)? ?(?:; )?',

+ )

+

+ width, height = resolution_wh

+ xyxy_list = []

+ class_name_list = []

+

+ while response:

+ m = _SEGMENT_DETECT_RE.match(response)

+ if not m:

+ break

+

+ gs = list(m.groups())

+ before = gs.pop(0)

+ name = gs.pop()

+ y1, x1, y2, x2 = [int(x) / 1024 for x in gs[:4]]

+ y1, x1, y2, x2 = map(round, (y1*height, x1*width, y2*height, x2*width))

+

+ content = m.group()

+ if before:

+ response = response[len(before):]

+ content = content[len(before):]

+

+ xyxy_list.append([x1, y1, x2, y2])

+ class_name_list.append(name.strip())

+ response = response[len(content):]

+

+ xyxy = np.array(xyxy_list)

+ class_name = np.array(class_name_list)

+

+ if class_list is None:

+ class_id = None

+ else:

+ class_id = np.array([class_list.index(name) for name in class_name])

+

+ return sv.Detections(

+ xyxy=xyxy,

+ class_id=class_id,

+ data={'class_name': class_name}

+ )

+

+prompt = "detect person; car; backpack"

+response = pali_gemma.predict(image, prompt)[0]

+print(response)

+

+detections = from_pali_gemma(response=response, resolution_wh=image.size, class_list=['person', 'car', 'backpack'])

+

+bounding_box_annotator = sv.BoundingBoxAnnotator()

+label_annotator = sv.LabelAnnotator()

+

+annotatrd_image = bounding_box_annotator.annotate(image, detections)

+annotatrd_image = label_annotator.annotate(annotatrd_image, detections)

+sv.plot_image(annotatrd_image)

+```

+

+In this code, we load PaliGemma run PaliGemma on an image, and annotate the image with the predictions from the model.

+

+Above, replace:

+

+1. `prompt` with the prompt for the model.

+2. `image.jpeg` with the path to the image in which you want to detect objects.

+

+To use PaliGemma with Inference, you will need a Roboflow API key. If you don't already have a Roboflow account, sign up for a free Roboflow account.

+

+Then, run the Python script you have created:

+

+```

+python app.py

+```

+

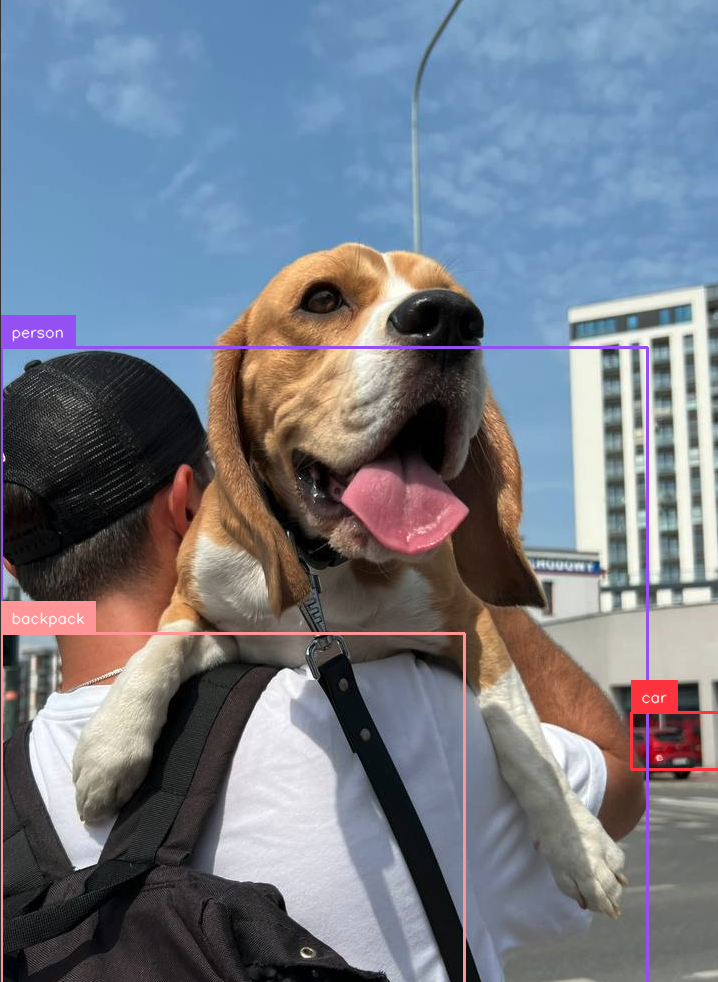

+The result from the model will be displayed:

+

+

diff --git a/docs/quickstart/licensing.md b/docs/quickstart/licensing.md

index 7578f4723..accf9d4f6 100644

--- a/docs/quickstart/licensing.md

+++ b/docs/quickstart/licensing.md

@@ -1,24 +1,3 @@

## Inference Source Code License

-The Roboflow Inference code is distributed under an Apache 2.0 license.

-

-## Using Models Hosted on Roboflow

-

-To use a model hosted on Roboflow for commercial purposes, you need a Roboflow Enterprise license.

-

-Contact the Roboflow sales team to inquire about an enterprise license.

-

-## Model Code Licenses

-

-The models supported by Roboflow Inference have their own licenses. View the licenses for supported models below.

-

-| model | license |

-| :------------------------ | :-----------------------------------------------------------------------------------: |

-| `inference/models/clip` | MIT |

-|`inference/models/gaze` | MIT, Apache 2.0 |

-| `inference/models/sam` | Apache 2.0 |

-| `inference/models/vit` | Apache 2.0 |

-| `inference/models/yolact` | MIT |

-| `inference/models/yolov5` | AGPL-3.0 |

-| `inference/models/yolov7` | GPL-3.0 |

-| `inference/models/yolov8` | AGPL-3.0 |

\ No newline at end of file

+See [Roboflow Licensing](https://inference.roboflow.com/quickstart/licensing/) for information on how Inference and models supported in Inference are licensed.

diff --git a/mkdocs.yml b/mkdocs.yml

index 1442529db..f1508f483 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -43,27 +43,27 @@ nav:

- YOLOv7: fine-tuned/yolov7.md

- YOLOv5: fine-tuned/yolov5.md

- YOLO-NAS: fine-tuned/yolonas.md

+ - Foundation Model:

+ - What is a Foundation Model?: foundation/about.md

+ - CLIP (Classification, Embeddings): foundation/clip.md

+ - CogVLM (Multimodal Language Model): foundation/cogvlm.md

+ - DocTR (OCR): foundation/doctr.md

+ - Grounding DINO (Object Detection): foundation/grounding_dino.md

+ - L2CS-Net (Gaze Detection): foundation/gaze.md

+ - PaliGemma: foundation/paligemma.md

+ - Segment Anything (Segmentation): foundation/sam.md

+ - YOLO-World (Object Detection): foundation/yolo_world.md

- Run a Model:

- Predict on an Image Over HTTP: quickstart/run_model_on_image.md

- Predict on a Video, Webcam or RTSP Stream: quickstart/run_model_on_rtsp_webcam.md

- Predict Over UDP: quickstart/run_model_over_udp.md

- Keypoint Detection: quickstart/run_keypoint_detection.md

-

- Deploy a Model:

- Configure Your Deployment: https://roboflow.github.io/deploy-setup-widget/results.html

- How Do I Run Inference?: quickstart/inference_101.md

- What Devices Can I Use?: quickstart/devices.md

- Retrieve Your API Key: quickstart/configure_api_key.md

- - Model Licenses: quickstart/licensing.md

- - Foundation Model:

- - What is a Foundation Model?: foundation/about.md

- - CLIP (Classification, Embeddings): foundation/clip.md

- - CogVLM (Multimodal Language Model): foundation/cogvlm.md

- - DocTR (OCR): foundation/doctr.md

- - Grounding DINO (Object Detection): foundation/grounding_dino.md

- - L2CS-Net (Gaze Detection): foundation/gaze.md

- - Segment Anything (Segmentation): foundation/sam.md

- - YOLO-World (Object Detection): foundation/yolo_world.md

+ - Model Licenses: https://roboflow.com/licensing

- Workflows:

- What is a Workflow?: workflows/about.md

- Understanding Workflows: workflows/understanding.md